Apple

Designing Future Experimental Products, Interfaces, and Technologies

My talk on “Discoverable Design” at Apple's Worldwide Developers Conference (WWDC)

On the Human Interface Design Prototyping team at Apple, I explore new technologies and determine how they function within our current and future products. This is a small, exploratory design team of hybrid designer-engineers.

Throughout the incubation process, we come up with ideas and invent and propose new features and products through drawings, animation, code, electronics, and models.

We design and build working, interactive prototypes to test, explain, and explore ideas for new hardware technologies across all of Apple's products.

We work cross-functionally with Apple's user interface design, engineering, marketing, and executive teams to develop prototypes.

Some of the products we have worked on:

Vision Pro, iPhone X, iPad Pro, iPad mini, Apple Pencil, Apple TV, MacBook Pro, ARKit, Portrait Mode, Animoji, Face ID, Live Photos, 3D Touch, Touch Bar, Taptic Engine, Multi-Touch trackpad gestures

Some shipped products that I have led:

Environment, Climate Change

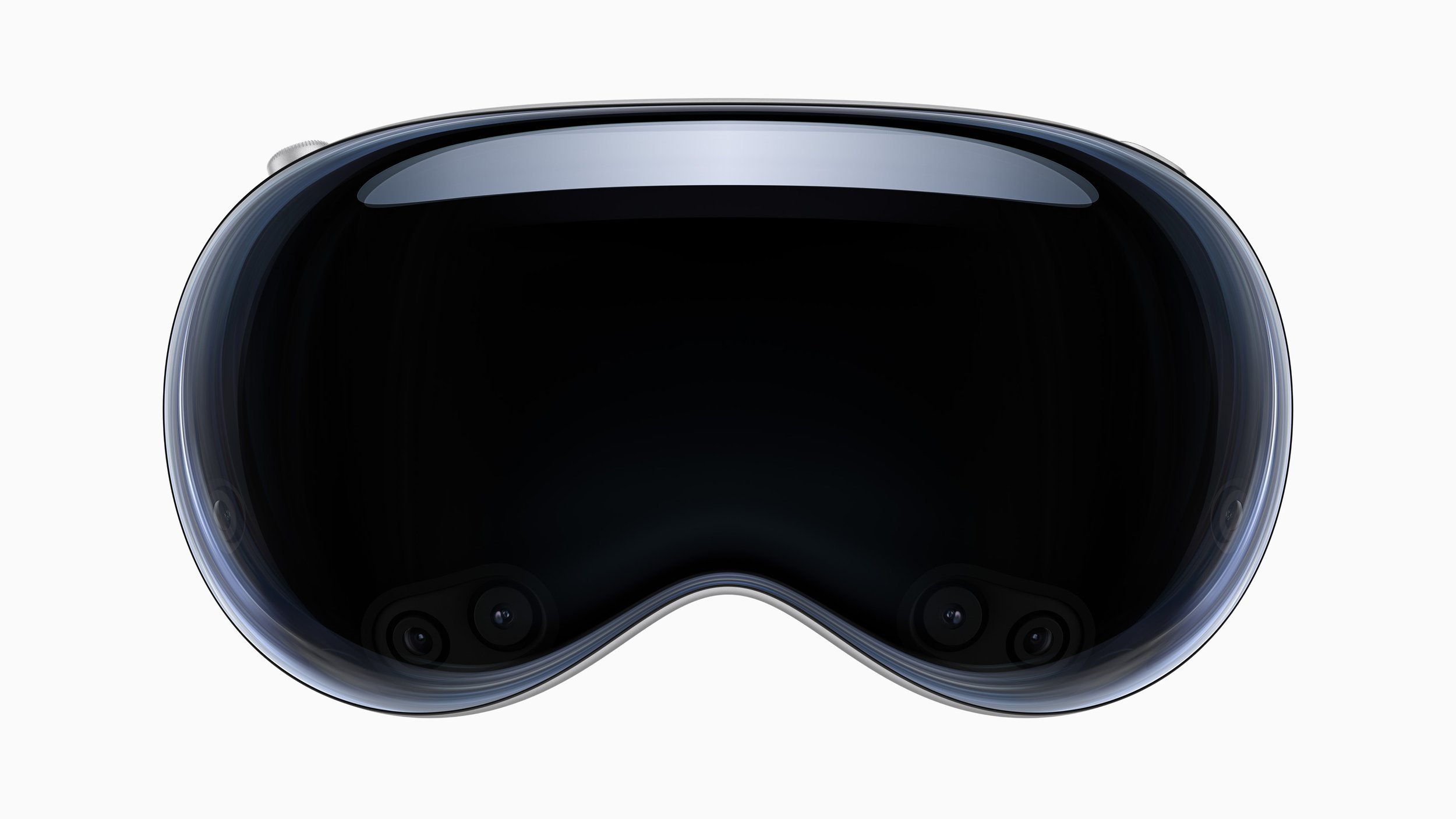

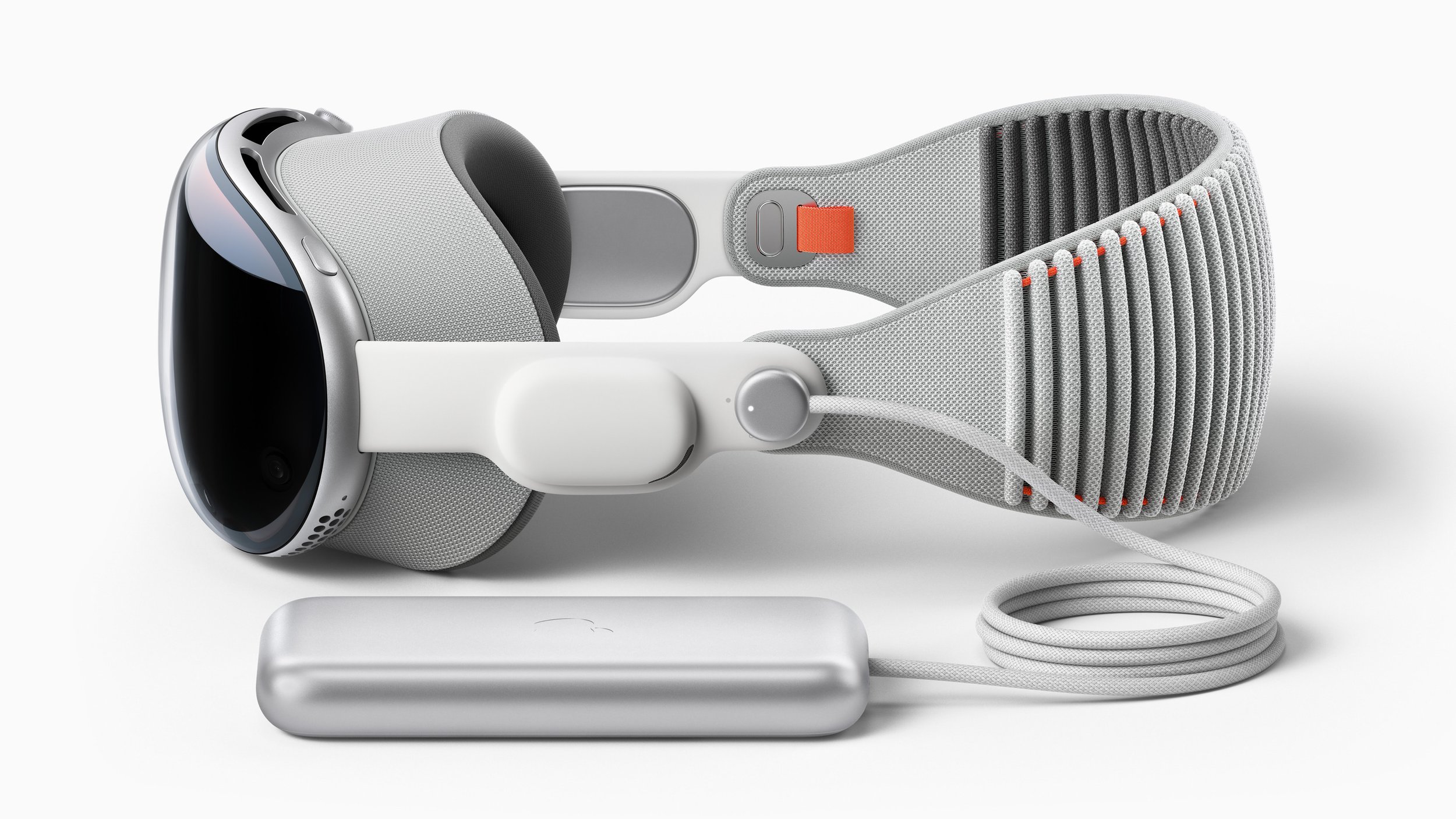

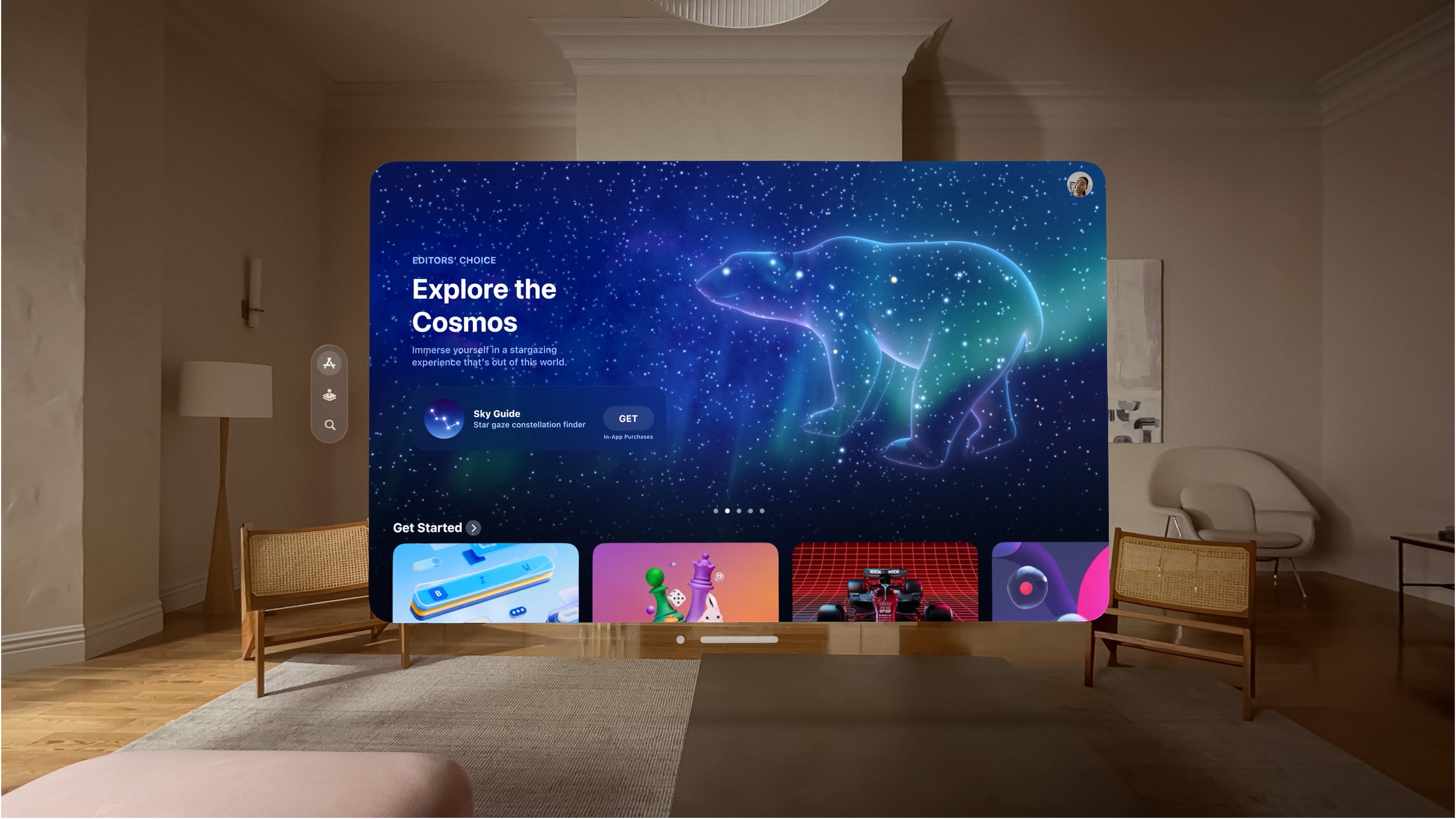

Apple Vision Pro

A revolutionary spatial computer that seamlessly blends digital content with the physical world, while allowing users to stay present and connected to others. Vision Pro creates an infinite canvas for apps that scales beyond the boundaries of a traditional display and introduces a fully three-dimensional user interface controlled by the most natural and intuitive inputs possible — a user’s eyes, hands, and voice.

Scribble with Apple Pencil

Scribble lets users handwrite with Apple Pencil in any text field, where their handwriting is automatically converted to typed text, making actions like replying to an iMessage or searching in Safari fast and easy.

LiDAR Scanner

The LiDAR Scanner measures the distance to surrounding objects up to 5 meters away, works both indoors and outdoors, and operates at the photon level at nanosecond speeds. New depth frameworks in iPadOS combine depth points measured by the LiDAR Scanner with data from both cameras and the motion sensors, enhanced by computer vision algorithms on the A12Z Bionic, for a more detailed understanding of a scene. The tight integration of these elements enables a whole new class of AR experiences on iPad Pro.

ECG on Watch

Electrodes built into the Digital Crown and the back crystal work together with the ECG app to read your heart’s electrical signals. Simply touch the Digital Crown to generate an ECG waveform in just 30 seconds. The ECG app can indicate whether your heart rhythm shows signs of atrial fibrillation — a serious form of irregular heart rhythm — or sinus rhythm, which means your heart is beating in a normal pattern.

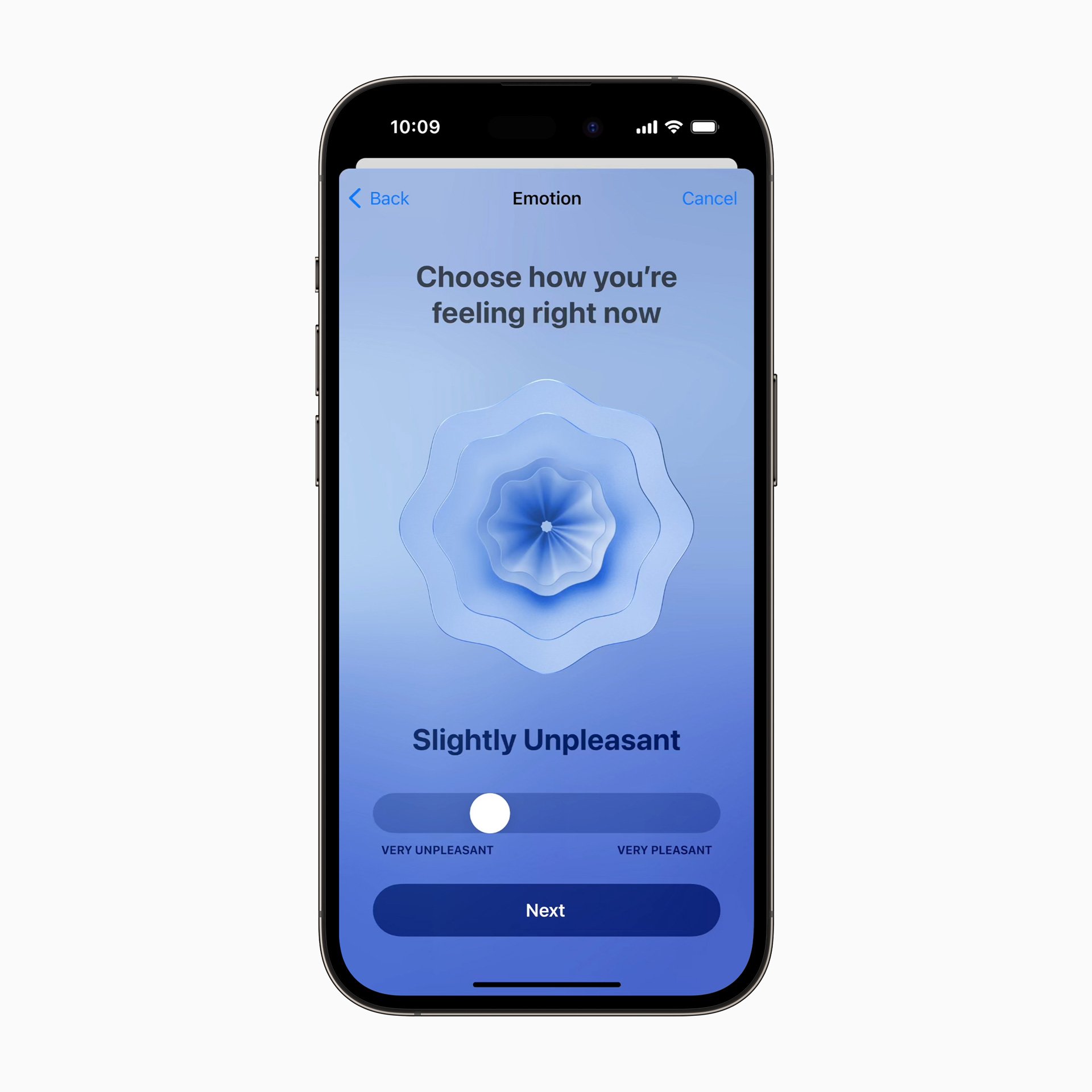

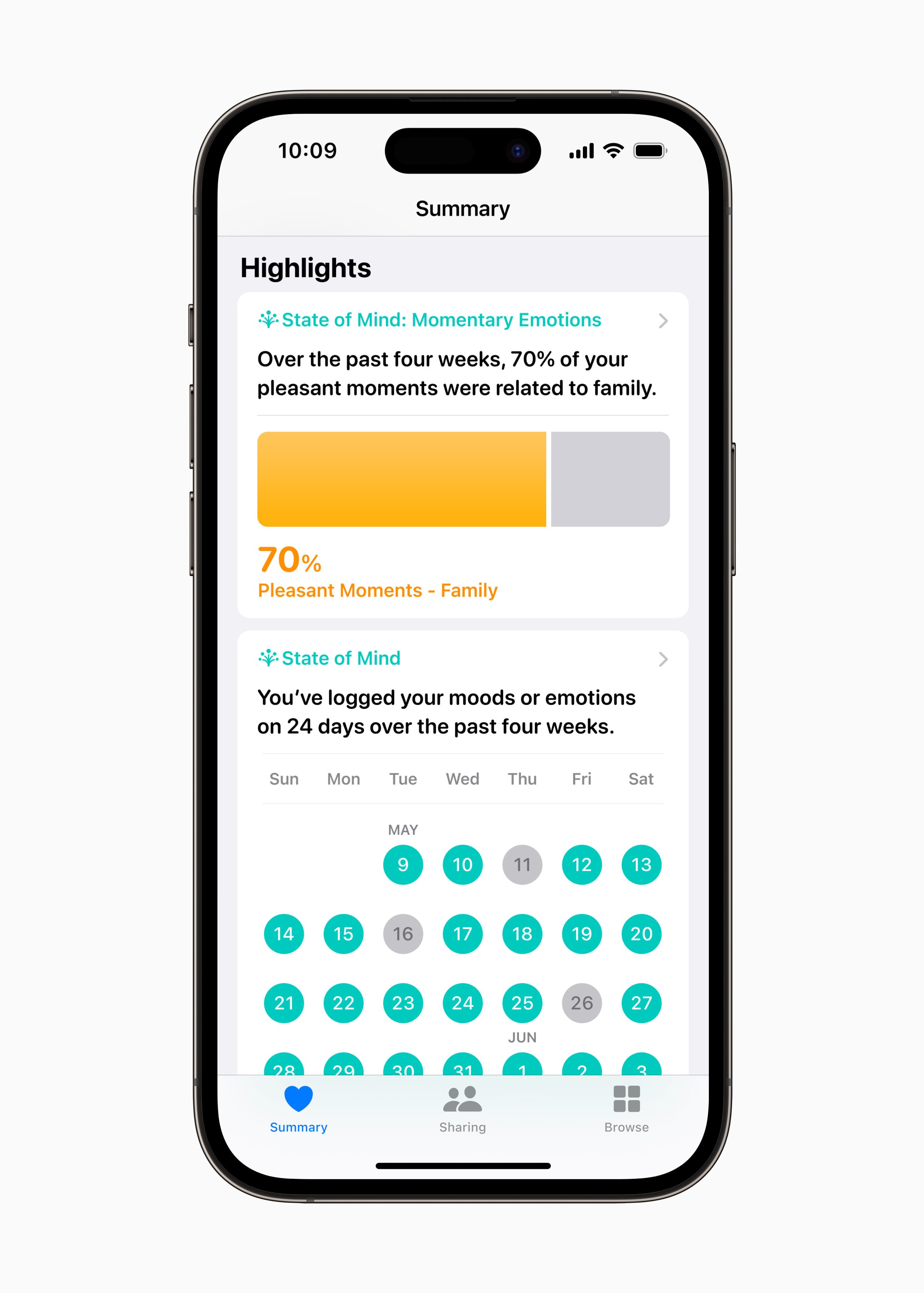

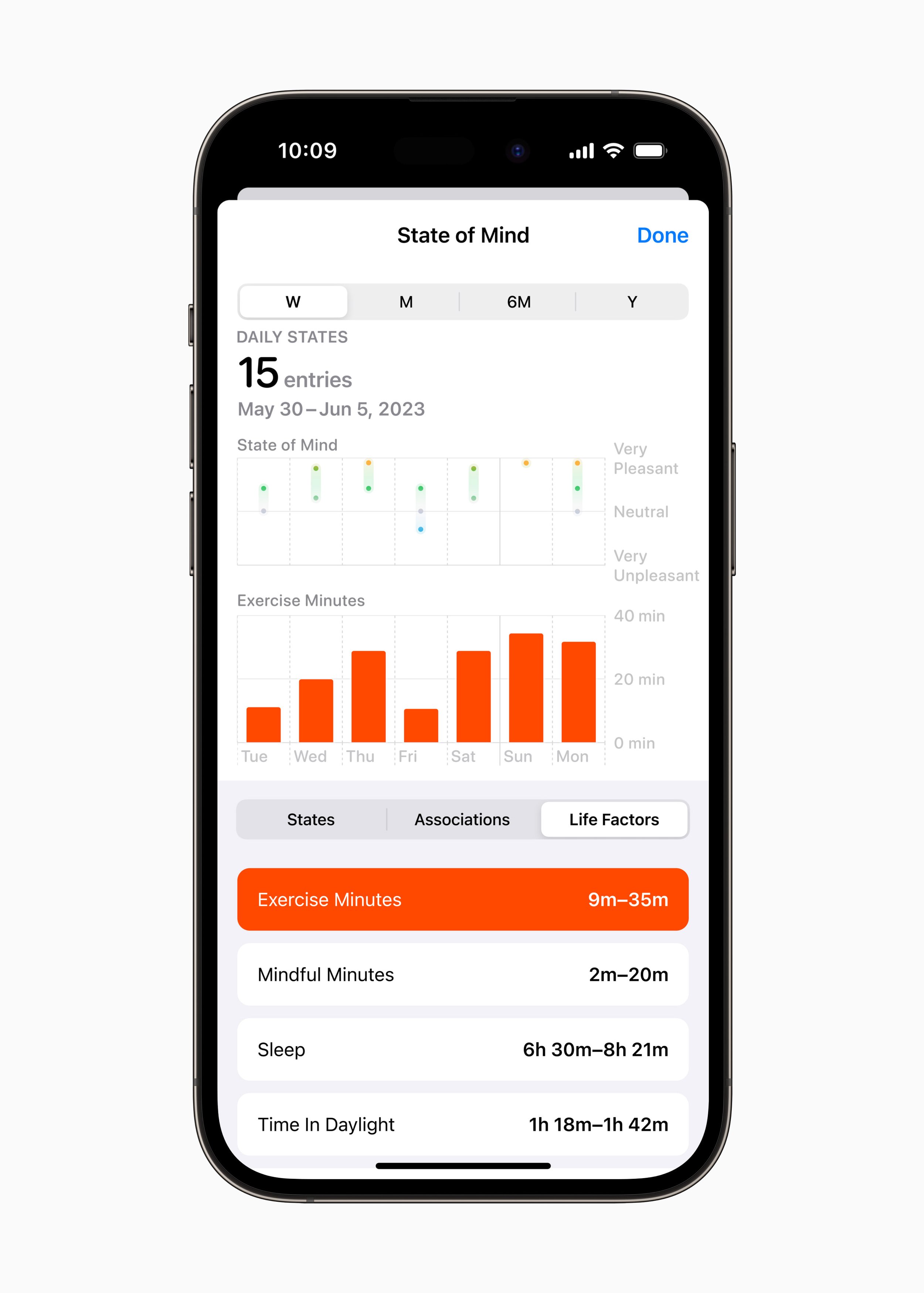

Mental Health

The Health app in iOS 17 and iPadOS 17, and the Mindfulness app in watchOS 10, bring an engaging and intuitive way for users to reflect on their state of mind.